LlamaSherpa: Document Chunking for LLMs

Smart Chunking Techniques for Enhanced RAG Pipeline Performance

You could naively chunk your documents — a straightforward method of breaking down large documents into smaller text chunks without considering the document’s inherent structure or layout.

Going this route, you divide the text based on a predetermined size or word count, such as fitting within the LLM context window (typically 2000–3000 words). The problem is that you can disrupt the semantics and context implied by the document’s structure.
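To make the problem concrete, here is a minimal sketch of naive chunking by word count. The function name naive_chunks, the tiny sample document, and the six-word limit are all made up for illustration; real pipelines use far larger limits, but the failure mode is the same.

```python
def naive_chunks(text: str, max_words: int = 6) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


# Illustrative sample only: two headings, each followed by one sentence.
document = (
    "1 Introduction\n"
    "We study prompt ranking for evaluation.\n"
    "2 Methodology\n"
    "We compare model completions in pairs and rank prompts by dissimilarity."
)

chunks = naive_chunks(document, max_words=6)
for number, chunk in enumerate(chunks, start=1):
    print(number, repr(chunk))
```

Notice that the second chunk starts in the middle of the introduction and buries the "2 Methodology" heading between two unrelated sentence fragments. That is the structural damage a layout-aware approach tries to avoid.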

Ambika Sukla proposes a solution called “smart chunking” that is layout-aware and considers the document’s structure.

This method:

- Is aware of the document’s layout structure, preserving the semantics and context.

- Identifies and retains sections, subsections, and their nesting structures.

- Merges lines into coherent paragraphs and maintains connections between sections and paragraphs.

- Preserves table layouts, headers, subheaders, and list structures.
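The idea behind these points can be sketched on a toy document tree. This is not how the library is implemented: the Section class, the layout_aware_chunks function, and the sample headings and sentences below are hypothetical stand-ins, used only to show what "keeping the section context with each chunk" can look like.

```python
from dataclasses import dataclass, field


@dataclass
class Section:
    """Hypothetical stand-in for a parsed section node."""
    title: str
    paragraphs: list[str] = field(default_factory=list)
    children: list["Section"] = field(default_factory=list)


def layout_aware_chunks(section: Section, parents: tuple[str, ...] = ()) -> list[str]:
    """Emit one chunk per paragraph, prefixed with its chain of headings."""
    path = parents + (section.title,)
    header = " > ".join(path)
    chunks = [f"{header}\n{paragraph}" for paragraph in section.paragraphs]
    # Recurse so nested subsections inherit the headings above them.
    for child in section.children:
        chunks.extend(layout_aware_chunks(child, path))
    return chunks


# Invented sample content, for illustration only.
paper = Section(
    title="2 Methodology",
    paragraphs=["We compare model completions in pairs."],
    children=[
        Section(
            title="2.1 Prompt Ranking",
            paragraphs=["Prompts are ordered by a dissimilarity score."],
        )
    ],
)

for chunk in layout_aware_chunks(paper):
    print(chunk)
    print("---")
```

Each chunk now carries the headings it lives under, so a paragraph from a nested subsection still tells the LLM which section it belongs to.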

To this end, Sukla created the LlamaSherpa library (installed from PyPI as llmsherpa), which includes LayoutPDFReader, a tool designed to split text in PDFs into these layout-aware chunks, providing a more context-rich input for LLMs and enhancing their performance on large documents.

Let’s get some preliminaries out of the way:

%%capture

!pip install llmsherpa openai llama-index

from llmsherpa.readers import LayoutPDFReader

import openai

import getpass

from IPython.display import display, HTML

from llama_index.llms import OpenAI

openai.api_key = getpass.getpass("What's your OpenAI key: ")

The following code sets up a PDF reader with a specific parser API endpoint, provides it a source (URL or path) to a PDF file, and instructs it to fetch, parse, and return the structured content of that PDF.

llmsherpa_api_url = "https://readers.llmsherpa.com/api/document/developer/parseDocument?renderFormat=all"

pdf_url = "https://arxiv.org/pdf/2310.14424.pdf" # also allowed is a file path e.g. /home/downloads/xyz.pdf

pdf_reader = LayoutPDFReader(llmsherpa_api_url)

doc = pdf_reader.read_pdf(pdf_url)

Step-by-Step Explanation of what just happened under the hood

Setting API Endpoint: The llmsherpa_api_url variable is assigned the URL of the external parser API. This API is responsible for parsing the PDF files.

Setting PDF Source: The pdf_url variable is given a URL pointing to a PDF file. However, as mentioned, it can also be assigned a local file path.

Initializing the PDF Reader:

- The LayoutPDFReader class is initialized with the llmsherpa_api_url. This tells the reader which API to use for parsing PDFs.

- Internally, during this initialization, the class sets up two HTTP connection pools using urllib3:

  - One for downloading PDFs (self.download_connection).

  - Another for sending PDFs to the external parser API (self.api_connection).

Reading and Parsing the PDF:

The read_pdf method of the pdf_reader object is invoked with the pdf_url as its argument.

Inside this method:

- The class determines if the input is a URL or a local file path.

- If it’s a URL, the _download_pdf method is invoked to fetch the PDF file from the given URL. It uses the download_connection to make the HTTP request, impersonating a browser user agent to avoid potential download restrictions.

- The file is read directly from the system if it’s a local path.

- Once the PDF file data is obtained, the _parse_pdf method is called to send this data to the external parser API (in this case, the llmsherpa API).

- The API processes the PDF and returns a JSON response with parsed data.

- The JSON response is then processed to extract the ‘blocks’ of data, representing structured information parsed from the PDF.

- These blocks are finally returned as a Document object.

Result: The doc variable now holds a Document object that contains the structured data parsed from the PDF. This Document object can be used to access and manipulate the parsed information.
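Two of the steps above are easy to picture in isolation: deciding whether the source is a URL, and pulling the blocks out of the parser's JSON response. The sketch below is an approximation written for this post, not the library's actual code. The helper names is_url and extract_blocks are hypothetical, and the response shape (a top-level "blocks" list whose items have "tag" and "text" keys) is an assumption; the real payload may be nested differently.

```python
from urllib.parse import urlparse


def is_url(source: str) -> bool:
    """Treat a source as a URL when it has an http(s) scheme and a host."""
    parsed = urlparse(source)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


def extract_blocks(response_json: dict) -> list[dict]:
    """Return the list of blocks from an ASSUMED response shape."""
    return response_json.get("blocks", [])


# Made-up response used only to exercise the helper.
fake_response = {
    "blocks": [
        {"tag": "header", "text": "2 Methodology"},
        {"tag": "para", "text": "We compare model completions in pairs."},
    ]
}

print(is_url("https://arxiv.org/pdf/2310.14424.pdf"))
print(is_url("/home/downloads/xyz.pdf"))
print([block["tag"] for block in extract_blocks(fake_response)])
```

Checking the scheme explicitly, rather than just testing for a non-empty scheme, keeps Windows-style paths such as C:\docs\file.pdf from being mistaken for URLs.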


What we end up with is a Document object with several methods available to it.

type(doc)

# llmsherpa.readers.layout_reader.Document

Retrieving Chunks from the PDF

The chunks method provides coherent pieces or segments of content from the parsed PDF.

for chunk in doc.chunks():
    print(chunk.to_text())

Extracting Tables from the PDF

The tables method enables you to retrieve tables identified and extracted from the PDF.

for table in doc.tables():
    print(table.to_text())

Accessing Sections of the PDF

The sections method allows you to segment the content of the parsed PDF. This is especially handy if you want to navigate or read specific chapters, sub-chapters, or other logical divisions in the document.

for section in doc.sections():
    print(section.title)

The code snippet below searches the parsed PDF document for a section with a given title, such as ‘2 Methodology’, and returns its complete content as HTML, including all subsections and nested content.

- The variable selected_section is initialized to None and acts as a placeholder for the desired section.

- The code iterates over all sections in the doc (a Document object) using the sections() method.

- During the iteration, if a section with the title ‘2 Methodology’ is found, the selected_section variable is updated to reference this section, and the loop is immediately exited.

- The to_html method is then used to generate an HTML representation of the selected_section. With both include_children=True and recurse=True set, the generated HTML includes the immediate child elements of the section and all of its descendants. This ensures a comprehensive view of the section and its sub-content.

- Finally, the HTML function is used to display the section in a Jupyter Notebook.

def get_section_text(doc, section_title):
    """
    Extracts the text from a specific section in a parsed PDF document.

    Parameters:
    - doc (Document): A Document object from the llmsherpa.readers.layout_reader library.
    - section_title (str): The title of the section to extract.

    Returns:
    - str: The HTML representation of the section's content, or a message if the section is not found.
    """
    selected_section = None

    # Find the desired section by title
    for section in doc.sections():
        if section.title == section_title:
            selected_section = section
            break

    # If the section is not found, return a message
    if not selected_section:
        return f"No section titled '{section_title}' found."

    # Return the full content of the section as HTML
    return selected_section.to_html(include_children=True, recurse=True)

You can see the text in any given section like so:

section_text = get_section_text(doc, '2 Methodology')

HTML(section_text)
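One caveat: an exact title comparison is brittle, because you need to know the section number and capitalization in advance. The sketch below shows one possible way to loosen the match by ignoring leading numbering and case. FakeSection, normalize_title, and find_section are hypothetical names invented for this example; the only thing assumed about real section objects is the title attribute already used above.

```python
import re
from dataclasses import dataclass


@dataclass
class FakeSection:
    """Hypothetical stand-in exposing only a title attribute."""
    title: str


def normalize_title(title: str) -> str:
    """Lowercase a title and drop leading numbering such as '2' or '2.1'."""
    without_number = re.sub(r"^\s*\d+(\.\d+)*\.?\s+", "", title)
    return " ".join(without_number.lower().split())


def find_section(sections, wanted: str):
    """Return the first section whose normalized title matches, else None."""
    target = normalize_title(wanted)
    for section in sections:
        if normalize_title(section.title) == target:
            return section
    return None


sections = [FakeSection("1 Introduction"), FakeSection("2 Methodology")]
match = find_section(sections, "methodology")
print(match.title if match else "not found")
```

With a parsed document you could try find_section(doc.sections(), "Methodology"). Be aware that this simple rule also strips a leading year or other number from a title, so treat it as a starting point.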

And you can use that text as context for an LLM:

def get_answer_from_llm(context, question, api_instance):
    """
    Uses an LLM to answer a specific question about the provided context.

    Parameters:
    - context (str): The text or content to analyze.
    - question (str): A question to answer about the context.
    - api_instance: An instance of the API (e.g., OpenAI) used to generate the answer.

    Returns:
    - str: The API's response text.
    """
    prompt = f"Read this text and answer the question: {question}:\n{context}"
    resp = api_instance.complete(prompt)
    return resp.text

question = "Describe the methodology used to conduct the experiments in this research"

llm = OpenAI()

response = get_answer_from_llm(section_text, question, llm)

print(response)

Below is the summary 👇🏽

The methodology used in this research involves conducting pairwise comparisons between different models. The researchers start by selecting a set of prompts (P) and a pool of models (M). For each prompt in P and each model in M, a completion is generated. Paired model completions (C) are formed for evaluation, where each pair consists of completions from two distinct models. An annotator then reviews each pair and assesses their relative quality, assigning evaluation scores (ScoreAi, ScoreBi) based on their preference.

To rank the prompts in P, an offline approach is proposed. The focus is on highlighting the dissimilarity between the responses of the two models. The researchers aim to identify and rank prompts that have a low likelihood of tie outcomes, where both completions are viewed as similarly good or bad by annotators.

To achieve this, the prompts and completion pairs within P are reordered based on dissimilarity scores. An optimal permutation (π) is found to create an ordered set (P̂π) that prioritizes evaluation instances with a strong preference signal from annotators. This reordering reduces the number of annotations required to determine model preference.

Conventional string matching techniques such as BLEU and ROUGE are not suitable for this problem as they may not capture the meaning or quality of completions accurately. The researchers aim to streamline and optimize the evaluation process by strategically selecting prompts that amplify the informativeness of each comparison.

Overview of doc.tables()

The doc.tables() method extracts and returns the tables found in a parsed PDF document.

Under the Hood:

- Node Traversal: The method begins by initializing an empty list, tables, to store nodes tagged as tables. It then traverses the entire document tree, starting from the root node.

- Tag-Based Identification: During traversal, the method checks the tag attribute of each node. If a node has its tag set to 'table', it is considered a table and is added to the tables list.

- Return: After traversing all nodes, the method returns the tables list, which contains all nodes identified as tables.

Potential Parsing Discrepancies:

- Broad Tagging: The method relies solely on the tag attribute to identify tables. If, during the initial PDF parsing, certain non-table elements are tagged as 'table' (due to layout similarities or parsing complexities), they will be incorrectly identified as tables by this method.

- PDF Structure Complexity: PDFs are visually-oriented documents. Elements that appear as text or lists to human readers might be structured in a tabular manner in the underlying PDF content. This can lead the parser to tag such elements as tables, even if they don’t visually resemble tables.

- Lack of Additional Verification: The method does not employ additional checks or heuristics to verify the tabular nature of identified nodes. Implementing further criteria (e.g., checking for rows/columns or tabular data patterns) could enhance table identification accuracy.

While doc.tables() provides a straightforward way to extract tables from a parsed PDF, you should be aware of potential discrepancies in table identification due to the inherent complexities of PDF parsing and the method’s reliance on tags alone.
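If you want an extra sanity check of the kind suggested above, one option is to inspect the HTML of each candidate and count its rows and cells. The sketch below is a rough heuristic, not part of the library. TableShapeParser and looks_like_table are hypothetical names, and it assumes the table HTML uses standard tr, td, and th tags, which you should confirm against your own output.

```python
from html.parser import HTMLParser


class TableShapeParser(HTMLParser):
    """Count the cells in each <tr> row of an HTML fragment."""

    def __init__(self):
        super().__init__()
        self.row_lengths = []
        self._in_row = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._in_row = True
            self.row_lengths.append(0)
        elif tag in ("td", "th") and self._in_row:
            self.row_lengths[-1] += 1

    def handle_endtag(self, tag):
        if tag == "tr":
            self._in_row = False


def looks_like_table(html: str, min_rows: int = 2, min_cols: int = 2) -> bool:
    """Heuristic: at least min_rows rows, each with at least min_cols cells."""
    parser = TableShapeParser()
    parser.feed(html)
    rows = parser.row_lengths
    return len(rows) >= min_rows and all(count >= min_cols for count in rows)


# Placeholder fragments for illustration only.
grid = (
    "<table><tr><th>name</th><th>value</th></tr>"
    "<tr><td>a</td><td>1</td></tr></table>"
)
single_cell = "<table><tr><td>One line of text tagged as a table</td></tr></table>"

print(looks_like_table(grid))
print(looks_like_table(single_cell))
```

You could then filter with something like [t for t in doc.tables() if looks_like_table(t.to_html())]. The thresholds are arbitrary, and tables with merged cells may be rejected, so tune them for your documents.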

# how many "tables" we have

len(doc.tables())

# 13

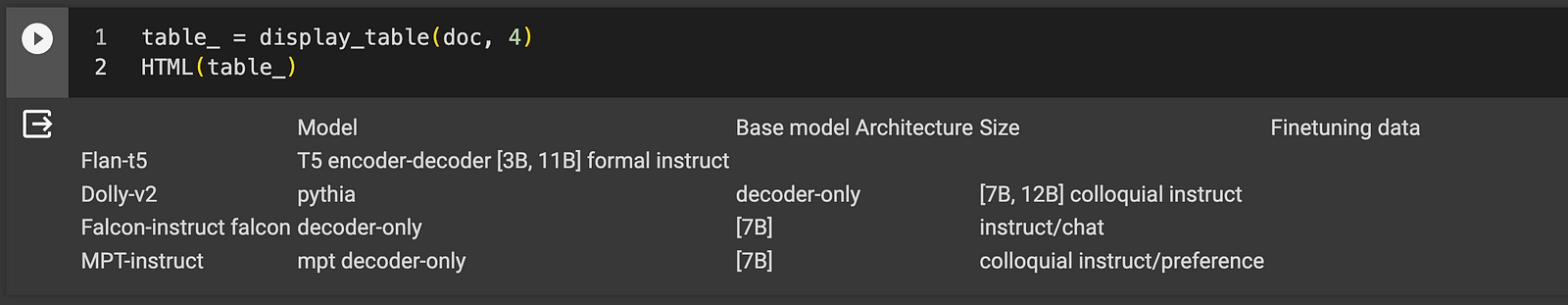

Let’s look at one of these tables and reason over it with an LLM:

def display_table(doc, index):
    """
    Returns the HTML representation of a specified table from a parsed PDF document.

    Parameters:
    - doc (Document): A Document object from the llmsherpa.readers.layout_reader library.
    - index (int): The index of the table to display.

    Returns:
    - str: The HTML representation of the table, or a message if the table is not found.
    """
    tables = doc.tables()
    if index < 0 or index >= len(tables):
        return "Table index out of range."
    return tables[index].to_html()

table_ = display_table(doc, 4)

HTML(table_)

question = "What insight can you glean from this table?"

response = get_answer_from_llm(table_, question, llm)

print(response)

And the response from the LLM:

From this table, we can glean the following insights:

1. There are four different models mentioned: Flan-t5, Dolly-v2, Falcon-instruct falcon, and MPT-instruct.

2. Each model has a different base model architecture: T5 encoder-decoder [3B, 11B] formal instruct for Flan-t5, pythia decoder-only for Dolly-v2, decoder-only for Falcon-instruct falcon, and mpt decoder-only for MPT-instruct.

3. The size of the models varies, with Flan-t5 and Dolly-v2 not specifying the size, Falcon-instruct falcon being [7B], and MPT-instruct also being [7B].

4. The finetuning data for each model is mentioned, with Flan-t5 and Dolly-v2 not specifying any, Falcon-instruct falcon using instruct/chat, and MPT-instruct using colloquial instruct/preference.

Vector search and Retrieval Augmented Generation with Smart Chunking

LayoutPDFReader is designed to chunk text while intelligently preserving the integrity of related content.

This means that all list items, including the paragraph that precedes the list, are kept together.

In addition, the items in a table are kept together, and each chunk includes contextual information from its section header and any parent section headers.

By using the following code, you can create a LlamaIndex query engine from the document chunks generated by LayoutPDFReader.

from llama_index.readers.schema.base import Document

from llama_index import VectorStoreIndex

index = VectorStoreIndex([])

for chunk in doc.chunks():
    index.insert(Document(text=chunk.to_context_text(), extra_info={}))

query_engine = index.as_query_engine()

def query_vectorstore(question, query_engine=query_engine):
    """
    Queries the vector store through a query engine and returns the response.

    Parameters:
    - question (str): The question to ask.
    - query_engine: The engine to use for querying (e.g., an instance of a class with a query method).

    Returns:
    - response: The response from the vector store.
    """
    response = query_engine.query(question)
    return response

response = query_vectorstore("What is the methodology in this paper?")

response.response

And the response from the LLM:

The methodology in this paper focuses on prioritizing evaluation instances that showcase distinct model behaviors. The goal is to minimize tie outcomes and optimize the evaluation process, especially when resources are limited. However, this approach may inherently favor certain data points and introduce biases. The methodology also acknowledges the risk of over-representing certain challenges and under-representing areas where models have consistent outputs. It is important to note that the proposed methodology is designed to prioritize annotation within budget constraints, rather than using it for sample exclusion.

response = query_vectorstore("How do you quantify A vs B dissimilarity?")

response.response

The quantification of A vs B dissimilarity can be done using the Kullback-Leibler (KL) divergence formula. This formula involves calculating the sum of the product of the probability of each element in A (pA) and the logarithm of the ratio between the probability of that element in A (pA) and the probability of that element in B (pB).
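The query engine hides the retrieval step, so here is a toy version of it to show why header-aware chunks help. This is a simplified stand-in, not what LlamaIndex does internally: it swaps learned embeddings for plain bag-of-words counts, and the function names and sample chunks (including their headings) are invented for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(question: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the question."""
    query = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(query, embed(c)), reverse=True)
    return ranked[:k]


# Invented chunks, each prefixed with its section heading.
sample_chunks = [
    "1 Introduction\nHuman evaluation of language models is expensive.",
    "2 Methodology\nWe rank prompts by the dissimilarity of model completions.",
    "5 Limitations\nPrioritizing some prompts may introduce bias.",
]

best = top_k("How are prompts ranked by dissimilarity?", sample_chunks)
print(best[0])
```

The retrieved chunk arrives with its heading attached, so the LLM that answers the question also knows which part of the paper the text came from.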

Conclusion

In summary, this post introduced LlamaSherpa, a library that addresses the challenge of chunking large documents for use with Large Language Models (LLMs).

LlamaSherpa’s “smart chunking” method is layout-aware, preserving the semantics and structure of the original document, which is crucial for maintaining the context and meaning. The library’s LayoutPDFReader tool efficiently processes PDFs to create more effective inputs for LLMs.

By utilizing LlamaSherpa, users can enhance the performance of their RAG pipelines, ensuring that the model’s context window encapsulates the most relevant and structured information from large documents.